Building a Better Game Review

The first and easiest target, of course, is rampant score inflation. At most popular modern review sources, the average game seems to score somewhat over a 7. To start, we can ask: why would they do this? It happens for a couple of faulty reasons: (1) to appease developers and (2) because reviewers compare current games objectively with older ones. Developers like inflated scores because they equate higher scores with increased sales. If everything gets pushed near the top, they believe this benefits them, since customers naturally associate high with good, perhaps without realizing that scores are inflated. Many review sources willingly go along with this, as publishers and developers are their livelihood. They need to keep them happy for ad revenue, sneak peeks, open access and early/free games. Many feel this pressure even without direct hints from the publisher and willingly bow to it. Then we have the other problem, objective comparisons to previous games. Say a big developer created a game and it ended up a hit. A reviewer gave it an 8.5. Now, two years later, the developer releases a sequel which looks better and fixes some of the issues in the first. What do you give it? It's very possible that the reviewer will give it a score higher than the original 8.5 because it's a better game than the original. Of course, this isn't necessarily the proper way of doing things, as you're mentally going back in time and judging the sequel by the standards of the earlier period instead of staying rooted in the present. The concept is a bit hard to confront and counter, but it needs to be addressed for proper judgement.

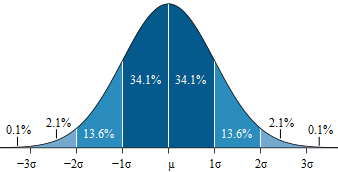

But what's so bad about score inflation (and closed inflation in general) anyway? Essentially, its entire purpose is to mislead and manipulate, generally to make a specific group appear better than it is at the expense of the entire system's legitimacy. Unfortunately, similar kinds of closed inflation exist everywhere in our modern world (closed inflation has values confined to a fixed range, unlike open-ended inflation, which doesn't carry the same issues). Take the scale 0-10, with a defined low, a defined high, and a defined mid-point (five). If five is a true average for this scale and you throw 100 random dots (or reviews) on the line, you generally end up with a nice bell curve density to show for it. This allows you to easily determine, both mentally and objectively, which scores are horrible, bad, average, good, and great. In the end, isn't that all people want to know from a review score? With a skewed curve, the results become misleading, if not downright manipulative. Suddenly it becomes much more difficult to tell horrible, average and great apart, as the separation between them is significantly reduced. The problem becomes even more complicated because the amount of inflation isn't consistent among the sources that inflate, and their scores then have to coexist with non-inflated ones.

Then how exactly do we counter score inflation? Well, the answer lies with the public, the review sources and, importantly, aggregate review sites such as gamerankings and metacritic. The public can always make a difference if they're willing to take a stand and care about an issue. The readers are the ones ultimately in control of letting these sources sink or swim, so their influence is even greater than inflation pressures if they can collectively make their voices and desires heard. The review sources themselves are also more than capable of making a point to use a realistic scale instead of an inflated one. Pushing an overall average from, say, 7.5 to 6.5 is a huge step on its own and shouldn't be overly difficult to work into their systems. Lastly, we can intelligently use aggregate sites to lessen the effect of inflation in a couple of ways. The first method is to combine scores from as many sites as possible and then use the results to display relative rankings of the games, which would be emphasized over each individual score. The second method is to take the average review score of each source and recalculate a 'deinflated' curve for it, with the middle around 5 (or 5.5 on a 1-10 scale). Reweighting each site this way before adding it to the mix makes the combined ratings immediately and drastically more meaningful, although this method still isn't as good as actual deinflation at the source.
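To make the two aggregate methods a little more concrete, here is a rough Python sketch of one way they could work. It is only an illustration under my own assumptions, not how gamerankings or metacritic actually compute anything: the function names, the sample sites and games, and the choice to also rescale each site's spread (`target_spread`) are all hypothetical.

```python
from statistics import mean, pstdev

def deinflate(scores_by_source, target_mean=5.0, target_spread=2.0):
    """Re-center every source so that its own average lands on target_mean.

    target_spread is an assumed value for how far one standard deviation
    should sit from the middle; the article only fixes the middle itself.
    """
    adjusted = {}
    for source, scores in scores_by_source.items():
        values = list(scores.values())
        mu = mean(values)
        sigma = pstdev(values) or 1.0  # guard: all scores identical
        adjusted[source] = {
            # shift and rescale, then clamp to the 0-10 scale
            game: min(10.0, max(0.0, target_mean + target_spread * (s - mu) / sigma))
            for game, s in scores.items()
        }
    return adjusted

def combine(adjusted):
    """Average the adjusted scores of each game across all sources."""
    per_game = {}
    for scores in adjusted.values():
        for game, s in scores.items():
            per_game.setdefault(game, []).append(s)
    return {game: mean(vals) for game, vals in per_game.items()}

def rank(combined):
    """Relative ranking, best game first (the first method in the text)."""
    return sorted(combined, key=combined.get, reverse=True)

# Made-up data: site_a inflates (average 8), site_b does not (average 5).
raw = {
    "site_a": {"Alpha": 9.0, "Beta": 8.0, "Gamma": 7.0},
    "site_b": {"Alpha": 6.0, "Beta": 5.0, "Gamma": 4.0},
}

adjusted = deinflate(raw)
combined = combine(adjusted)
ranking = rank(combined)
print(ranking)
```

In this toy data both sites agree on the order of the games and differ only in how high they score them, so after re-centering they produce the same adjusted numbers and the inflated site no longer drags the combined average upward.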

A second giant red target in the review world is the undue focus on review scores versus the actual text. Review scores mean something, but in the end each score is either a rigidly objective number (which doesn't necessarily help the end reader) or an entirely subjective number (which also doesn't necessarily help the end reader). Reading a full, well-written review certainly helps readers decide if a product matches their tastes more than numerical methods ever could. However, I'm not going to pretend for a moment that the importance of scores is an easy thing to change, as modern consumerist society by and large gravitates towards wanting its information in short, concise and easy-to-understand packages. Thus, in the end, it all comes down to presentation and how the publishers decide to display scores in contrast to the review text itself. Do they make a big deal of the score and paste it in several places, perhaps at the bottom of a page, at the top, and then again at the front or the back? This can certainly be toned down. I personally favor an approach like that of Edge-Online, which displays only a simple text number at the end of the page. This re-emphasizes the text and encourages readers to take note of the entire review rather than just skimming to the shiny score wrap-up boxes at the bottom.

I'd also like to see an overhaul of the subcategories so commonly found at the bottom of each review near the score. You know the ones: graphics, sound, fun factor, gameplay, etc. Again, I'm not saying that summaries are inherently bad things, but I feel that we could think of better common categories that relate more closely to what really shapes people's opinions of a game. Thus I will propose four summary categories: Style/Atmosphere, Technical Aspects, Gameplay and Longevity. Style/atmosphere is a natural combination of graphics, sound, force feedback and more. It goes far beyond raw polygon counts. Style and atmosphere are the sum of the immersive effects that make or break a player's final impression of the world the developers created for us. Pumping out more polygons is relatively easy. Creating a good style and an immersive atmosphere is not. Technical is just that, a summary of the technical aspects of the game. Is anything blatantly broken or glitchy? Is the game built in a manner that allows the style, atmosphere and gameplay to shine through? Modern games are very complex, and while gamebreaking glitches are relatively rare, any number of small glitches or technical issues may still frustrate gamers into putting the game away, possibly forever. Technical aspects can also include audio/video features and pure prowess for those who look for such things (e.g. native 1080p rendering with full 5.1 sound in-game and in cutscenes). Gameplay is simply how entertaining the game is while the player is interacting with it. For example, say you have an FPS with an item system. How fun are the shooting moments throughout the game? How fun are the menu moments? What about the exploration time? Do they work together well? Or do they fall apart or fail to mesh at times? Last, we have longevity, the measure of how long a game will be able to suitably entertain.
A well-balanced game with a strong leaderboard system and easy-to-use party/room systems has the capability to entertain for quite some time. If it is a score attack type of game, then how balanced is the score system? Games that are easy to learn and hard to master are optimal, as they're fun to pick up and play yet can be deep enough to allow players to continually learn and improve for a long time, up to their limits. These four categories seem to nail what gamers look for far more accurately than most summary categories, which are often downright vague or even bizarre.

Overall, I suppose my main hope and desire is to take the rigid objectivity out of game reviewing and replace it with things more useful to customers in making their decisions. The way I see it, subjectivity (and even complete subjectivity) is quite fine with the proper use of aggregate review sites. I'm not saying our current aggregate sites are anywhere close to perfect, but they're a nice start. The sum of many subjective opinions becomes a concrete objective result, and one that's far more in line with the public.